Did you know there is a technique in Deep Learning (DL) that doesn’t require large data sets and extremely long training times? It’s called Transfer Learning and the fact if you have done any “Hello World” image detection examples or followed my Tensorflow TFLeanring Course you have already used. Data Teams with Data Engineers and Data Scientist should know Transfer Learning. Let us jump into understanding Transfer Learning.

What is Transfer Learning

Transfer Learning is a Machine Learning (ML) technique that focusing on storing knowledge gained from one problem and applying to another related problem. Data Scientist start by building one model then use that same model as the starting point for a new model. Typically the secondary model is a related problem but not always. For example, let us take a model that was built by our friend Dwight to detect images of bears. Now Dwight has used that model to try and figure out how to identify the best bear. Part of that model does image detection it identifies a bear.

Dwight can now share his model with his best friend Jim who wants to build a model to detect dogs. Since the model that Dwight has already been pre-trained Jim can reduce his time in training.

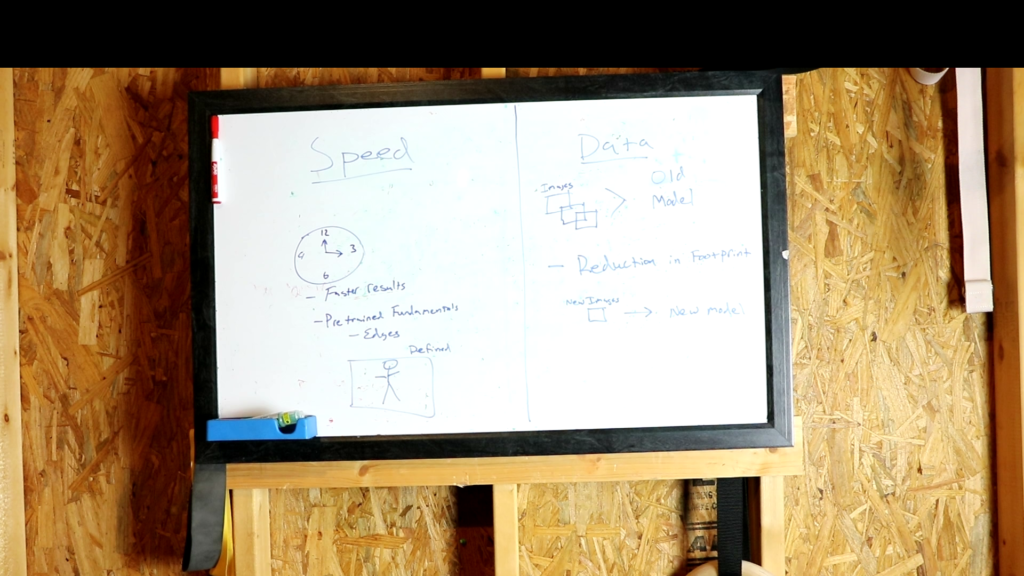

Transfer Learning speeds up time to results (does not guarantee results😊)

The second thing you need to know about Transfer Learning speeds up time to results. Think of Transfer Learning as a framework in programing languages. When I was a Web Developer in .NET community, I could build features within my Web Application quicker using .NET functions already built in. For example, connecting to a SQL data could be done using a built-in function called ConnectionString. The complicated details of building that connection to SQL server was abstracted away from me.

Using Transfer learning Data Teams are not starting from scratch which allows models to be built and trained faster. Just as frameworks allow to abstract away complexity, Transfer Learning is similar in that developers can focus on solving higher level problems. In our Bear detector example our friend Dwight has already done the hard work for building an image detector. Now Jim can change a few lines of code and build a new model.

Transfer Learning for Data Reduction

When we think of Deep Learning large data sets are what comes to mind. Transfer Learning allows Data Scientist to use smaller data sets to train models. By utilizing models already built for one task the model can then be retooled to solve a different problem. In our previous example of an image detector for Bears. How much data would need to be applied to create a new model to identify dogs? How about the Jetson Nano thumbs up or down project?

One area being impacted by Transfer Learning is Healthcare. Pretrained models are huge in helping with Healthcare models. For example, let us say there is a specific lung image detection model that is trained 80% of the way this is called a pretrained model. Data Teams can use this model to apply to their problem to take it the remaining 20% of way to train. Imagine one model trained to detect scar tissue can be used to detect other complex lung issues like Pneumothorax, Cancer, COPD, and more.

Most Computer Vision Already Incorporates Transfer Learning

For many reasons we have already discussed Object detection incorporated Transfer Learning. Edge detections is already designed. An Edge is the sharp contrast in a image. For example, the below is a photo of a Jim from the Office, notice where his brown tie meets his yellow shirt? This would be an edge. Tensorflow and other Deep Learning Frameworks come with functions ready to do object detection. Those function already incorporate models that can detect edges in images.

One example is in the Jetson Nano Getting Started Project where you can build a model to detect Thumbs Up or Thumbs Down. Out of the box we just use the pretrained model and add our data. For this model we are adding our own images of thumbs up and thumbs down. Using Transfer Learning allows for Jetson Nano users to quick build an image detection with minimal coding and data.

NVIDIA has a Transfer Learning Toolkit in it is 2nd Generation

We all know here at Big Data Big Questions we love the NVIDIA team. Well at NVIDIA’s GPU Cloud or NGC they have catalog of Deep Learning frameworks like we have just talked about. Whether you are looking to train a model for healthcare with their Clara Framework or Natural Language Processing (NLP) with BERT. Many of these models come pretrained to apply your data to solve your problem. Here is the NVIDIA official statement on the NVIDIA Transfer Learning Toolkit:

To enable faster and accurate AI training, NVIDIA just released highly accurate, purpose-built, pretrained models with the NVIDIA Transfer Learning Toolkit (TLT) 2.0. You can use these custom models as the starting point to train with a smaller dataset and reduce training time significantly. These purpose-built AI models can either be used as-is, if the classes of objects match your requirements and the accuracy on your dataset is adequate, or easily adapted to similar domains or use cases.

By using NVIDIA’s TLT 2.0 data teams can reduce development by up to 10X. Even cutting development times in half is a huge game changer for A.I. development.

Wrapping Up Transfer Learning

Transfer Learning is a powerful technique within Deep Learning for helping put models into production faster and with smaller data sets. The key application of Transfer Learning is building off previous training just like we do as humans. The first time I learned to program with Java was hard! Object-Oriented programing was new to me. However, over time I got better, then when I switched to C# for it was a lot easier to take in the concepts and learn. See I was building off my previous training in Java to learn C#.